Page 173, Section 7.2.1 (TensorFlow image-processing functions): the mode argument `'r'` passed to `FastGFile` should be `'rb'`.

It should read:

`tf.gfile.FastGFile("/path/to/images", 'rb')`

Without the `b` (binary) flag, reading the image fails with this error:

`'utf-8' codec can't decode byte 0xff in position 0: invalid start byte`
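The same failure can be reproduced without TensorFlow. The sketch below uses Python's built-in `open()` with an explicit UTF-8 encoding (an assumption standing in for the platform default that text mode would use) and a hypothetical file holding only a JPEG-style header. JPEG data begins with the byte `0xFF`, which can never start a UTF-8 character, so text mode fails at position 0 while binary mode returns the raw bytes.

```python
import os
import tempfile

# Hypothetical stand-in for an image file: JPEG data starts with 0xFF 0xD8,
# and 0xFF is not a valid first byte of any UTF-8 character.
JPEG_HEADER = b"\xff\xd8\xff\xe0" + b"\x00" * 12

path = os.path.join(tempfile.mkdtemp(), "fake.jpg")
with open(path, "wb") as f:
    f.write(JPEG_HEADER)

# Text mode ('r') decodes the bytes as UTF-8 and fails on the first byte.
try:
    with open(path, "r", encoding="utf-8") as f:
        f.read()
    text_mode_error = None
except UnicodeDecodeError as e:
    text_mode_error = str(e)

# Binary mode ('rb') returns the bytes unchanged, which is what an image
# decoder such as tf.image.decode_jpeg expects as input.
with open(path, "rb") as f:
    raw = f.read()

print(text_mode_error)
print(raw[:2])
```

In the book's code the fix is only the mode string: pass `'rb'` so that `FastGFile(...).read()` yields bytes for the decoder.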

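Chapter 6, Section 6.4.1 (the LeNet-5 model): with the hyperparameters from Chapter 5's mnist_train.py, I could not reproduce the output shown on page 154. After downloading the source code, I found that the parameters had been changed: LEARNING_RATE_BASE is actually set to 0.01.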

With LEARNING_RATE_BASE = 0.8, as in Chapter 5, I got the following:

After 1 training step(s), loss on training batch is 4.17216.

After 1001 training step(s), loss on training batch is 9.50182.

After 2001 training step(s), loss on training batch is 8.4391.

After 3001 training step(s), loss on training batch is 7.58759.

After 4001 training step(s), loss on training batch is 6.84723.

After 5001 training step(s), loss on training batch is 6.21202.

With LEARNING_RATE_BASE = 0.01, I got the following:

After 1 training step (s), loss on trainning batch is 7.14841.

After 1001 training step (s), loss on trainning batch is 0.7858.

After 2001 training step (s), loss on trainning batch is 0.751647.

After 3001 training step (s), loss on trainning batch is 0.723011.

After 4001 training step (s), loss on trainning batch is 0.703585.

After 5001 training step (s), loss on trainning batch is 0.667171.
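For context on why the base rate matters so much here: `tf.train.exponential_decay` computes `base_rate * decay_rate ** (global_step / decay_steps)`, with the exponent floored when `staircase=True`. The sketch below reimplements that formula in plain Python. The decay rate 0.99, `decay_steps = 55000 / 100 = 550` (training-set size over batch size), and `staircase=True` are assumptions about the Chapter 5 settings, not something stated in the post. Under those assumptions, by step 5000 the rate with LEARNING_RATE_BASE = 0.8 is still about 0.73, so the decay barely changes it over the run logged above.

```python
import math


def exponential_decay(base_rate, global_step, decay_steps, decay_rate,
                      staircase=True):
    """Plain-Python version of the schedule tf.train.exponential_decay computes."""
    exponent = global_step / decay_steps
    if staircase:
        # Staircase mode decays only at whole multiples of decay_steps.
        exponent = math.floor(exponent)
    return base_rate * decay_rate ** exponent


# Assumed Chapter 5 settings (not confirmed by the post).
LEARNING_RATE_DECAY = 0.99
DECAY_STEPS = 55000 // 100  # training examples / batch size = 550

for base in (0.8, 0.01):
    for step in (0, 1000, 5000):
        lr = exponential_decay(base, step, DECAY_STEPS, LEARNING_RATE_DECAY)
        print(f"base={base}, step={step}: learning rate = {lr:.4f}")
```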

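In Chapter 5, when I run the `inference` function from the MNIST best-practice example, I always get "Variable layer1/weights already exists, disallowed. Did you mean to set reuse=True in VarScope?" I don't know how to fix this. Thanks in advance for any answer.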

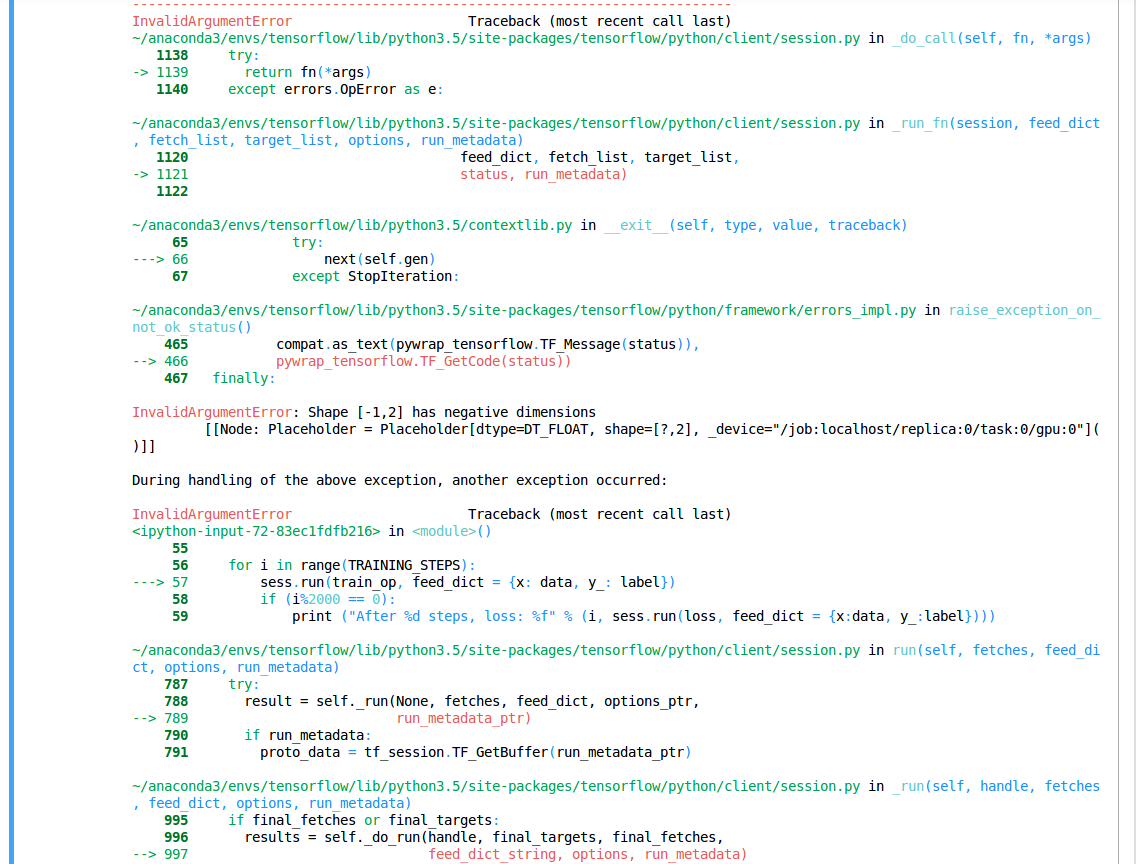
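This error is raised by `tf.get_variable` when a variable with the same name already exists in the current graph and the enclosing `tf.variable_scope` does not allow reuse. A likely trigger (an assumption, since only the screenshot is available) is building the model twice in the same default graph, for example by re-running a notebook cell. The sketch below uses a hypothetical one-layer `inference` in place of the book's and shows two standard fixes: pass `reuse=True` on later calls, or call `tf.reset_default_graph()` before rebuilding. It targets the TF 1.x graph API and falls back to `tf.compat.v1` on TensorFlow 2.x.

```python
import tensorflow as tf

# Use the TF1-style graph API on TensorFlow 2.x; on TF 1.x this is skipped.
if hasattr(tf, "compat") and hasattr(tf.compat, "v1"):
    tf = tf.compat.v1
    tf.disable_eager_execution()


def inference(input_tensor, reuse=False):
    # Hypothetical one-layer stand-in for the book's inference();
    # only the variable-scope handling matters here.
    with tf.variable_scope("layer1", reuse=reuse):
        weights = tf.get_variable(
            "weights", [784, 10],
            initializer=tf.truncated_normal_initializer(stddev=0.1))
        biases = tf.get_variable(
            "biases", [10], initializer=tf.constant_initializer(0.0))
    return tf.matmul(input_tensor, weights) + biases


x = tf.placeholder(tf.float32, [None, 784])
y_train = inference(x)             # first call creates layer1/weights
y_eval = inference(x, reuse=True)  # fix 1: later calls reuse the variables

# Calling again without reuse reproduces the error from the question.
try:
    inference(x)
    raised = False
except ValueError:
    raised = True

n_vars_before_reset = len(tf.global_variables())  # weights and biases only

# Fix 2: start from an empty graph before building the model again.
tf.reset_default_graph()
y_fresh = inference(tf.placeholder(tf.float32, [None, 784]))
```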

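Hi, I ran into a problem running the GitHub code for Chapter 4 (Deep Neural Networks), part 3 "Regularization".

The problem is in step 5, training the loss function that includes the regularization term (`loss`).

Step 4, training `mse_loss`, worked without any problems.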

I'm stuck at the same point too and don't know what's going on.

In my case, step 4 (training `mse_loss`) also ran without problems and the separating line was plotted, but step 5 fails.

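I followed the provided TensorFlow 1.0 version of the code exactly, and other people online who copied and pasted the same code hit the same problem.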

How should I fix this? Or should I just skip this part? Thanks.

(My TensorFlow version is 1.2.)